Our work is now in our UCL spinout company, MotionInput Games!

Please visit us there for our latest efforts (version 3.4 and onwards, 2024+)

www.motioninputgames.com

MotionInput v3.0-3.2 (Legacy and Testing) Technical Preview Downloads

This software is still in development. It has been made available for Community and Feedback purposes and is free for non-commercial personal use.

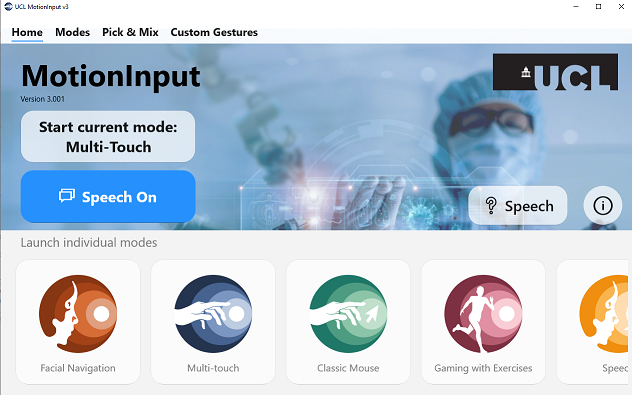

MotionInput is a gesture- and speech-based recognition layer for interacting with operating systems, applications and games via a webcam.

Latest Versions: 3.2 In-Air Multitouch and 3.2 Facial Navigation as of April 2023 - "MI3"

These two apps are now available on the Microsoft Store (search for "UCL") in the UK, USA and Canada, and on the UCL XIP platform (all regions) to download.

For full instructions on the updated Facial Navigation software, please visit https://www.facenav.org.

For feedback, software feature requests and commercial licensing terms, please contact us via the UCL Community Feedback Form.

For information about software licensing, please see the UCL Non-Commercial Use Licence.

Project Homepage for more details

Windows Applications

Requirements:

- Windows 10/11

- 4GB+ RAM

- A webcam with microphone

- 1GB free space per application

- The latest Windows updates installed

Legacy Development Builds Below

Running prior releases of the software:

- If necessary, install ViGEMBus from here

- Press the download button next to the app you wish to explore

- Unzip the downloaded compressed folder. If you selected the Installer, run the installer and follow the instructions.

- If you selected Portable Zip, then extract the folder, and look for the MI3 executable Icon file in that folder to run.

If these steps are not successful for you, please see this FAQ and if that doesn't help, please use the Community Feedback Form or email form below to contact us and we will try to help.

(LEGACY) Features:

The first two Legacy Technical Preview applications, In-Air Multitouch and Facial Navigation, will enable the majority of touchless controls on Windows PCs. Both of these have speech capabilities by default. These two technical previews do not come with a GUI for settings, and have been preset with values.

The Speech Transcribe and Commands modes were made possible with the UCL Ask-KITA software, which is based on the VOSK engine.

The speech capability allows custom spoken phrases to be mapped to shortcut keys in any application on a Windows computer.

It allows live captioning and transcription, and handles mouse and keyboard events such as "click", "double click", "left arrow", "right arrow", "page up", "page down" and other commands. For more information on the speech commands available, see the FAQ.
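The phrase-to-shortcut mapping described above can be pictured as a simple lookup table. The following is a minimal Python sketch, not the MotionInput or Ask-KITA implementation: the `SHORTCUTS` table, the key names, the "save file" phrase and the `resolve_phrase` helper are all hypothetical and only illustrate how a recognised phrase might be normalised and matched to a key combination.

```python
from typing import Dict, Optional, Tuple

# Hypothetical table: spoken phrase -> keys pressed together.
# Key names are illustrative and not taken from the MotionInput config files.
SHORTCUTS: Dict[str, Tuple[str, ...]] = {
    "page up": ("pageup",),
    "page down": ("pagedown",),
    "left arrow": ("left",),
    "right arrow": ("right",),
    "save file": ("ctrl", "s"),  # example of a custom phrase
}


def resolve_phrase(phrase: str) -> Optional[Tuple[str, ...]]:
    """Return the key combination for a recognised phrase, or None if unknown."""
    # Lower-case and collapse repeated whitespace before the lookup.
    normalised = " ".join(phrase.lower().split())
    return SHORTCUTS.get(normalised)


print(resolve_phrase("Page  Up"))   # ('pageup',)
print(resolve_phrase("save file"))  # ('ctrl', 's')
print(resolve_phrase("hello"))      # None
```

Keeping the table as plain data means new phrases can be added without touching the lookup logic.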

Some of the Speech Commands currently available in English:

- Say "click", "double click" and "right click" for those mouse buttons.

- In any editable text field, including in Word, Outlook, Teams etc; say "transcribe" and "stop transcribe" to start and stop transcription. Speak with short English sentences and pause, and it should appear.

- In any browser or office app, say "page up" and "page down". "Cut", "copy", "paste", "left arrow" and "right arrow" work as well.

- In PowerPoint you can say "next", "previous", "show fullscreen" and "escape".

- "Windows run" will bring up the Windows key + R dialog box.

- With the June 2022 3.01+ microbuild releases, you can say "hold left" to hold the left mouse button and drag in a direction. Say "release left" to let go.

- "Minimize" minimizes the window in focus.

- "Maximize" maximizes the window in focus.

- "Maximize left" or "maximize right" resizes the window in focus to 50% of the screen on that side.

- "Files" opens a File Explorer window.

- "Start menu" opens the Windows Start menu and, when said a second time, closes it.

- "Screenshot" is another useful command to try, along with "paste" into MS Paint.

1. (LEGACY) MotionInput3 (MI3) In-Air Multitouch with speech

July 2022

Download In-Air Multitouch with Speech (these are experimental and legacy builds)

The In-Air Multitouch with Speech app is a technical Windows 10/11 demonstrator that shows two in-air touchpoints, made by pinching your index fingers against your thumbs. Try it out by browsing webpages in a browser, swiping through photos, and so on.

Either run the Installer version to install to Windows, or unzip the entire contents of the Portable Zip version, keeping the folder structure as it is, and run the program named MI3-Multitouch-3.03.exe

Gestures and their assignments:

- Index Pinch (Index and Thumb) – Windows Touchpoints Press

- Middle Pinch (Middle and Thumb) – Left Mouse Click (or just say "click")

- Ring Pinch (Ring and Thumb) – Double Click (or just say "double click")

- Pinky Pinch (Pinky Little Finger and Thumb) – Right Click (or just say "right click")

- Both right and left hands doing Index Pinch, moving in and out - Touchpoints Drag (zooming in a browser)

- You can also drag in-air by saying "hold left" (meaning, hold the left mouse button), and "release left" (to release the left mouse button).

- If you have multiple monitors, you can use the other hand and a pinky pinch to move the mouse to the other screen.

The dominant hand is set to right by default and can be changed via JSON in data\mode_controller.json.

To change the dominant hand from right to left, open data\events.json and rename the default mode from “touchpoint_right_hand” to “touchpoint_left_hand”.

Save the file and restart the program.

The speech commands as above are in this build.

2. (LEGACY) MotionInput3 (MI3) Facial Navigation with Speech

September 2022

This app will allow a user to navigate with either their nose (NoseNav), Multitouch in the air, or with Eye Gaze. They can either say commands, or use combinations of facial movements to act as mouse presses or keyboard keys.

Either install with the Installer version, or download the Portable Zip version, unzip its entire contents, keeping the folder structure as it is, and run the program named MI3-FacialNavigation-3.04.exe

v3.04 for Facial Navigation features new commands for Visual Studio accessibility.

Note that this version is set to Nose Navigation with Speech by default, and you rotate through the modes with the phrase "Next Mode". If, however, you wish to make it start with Eye Gaze with Speech permanently, back up the data\mode_controller.json file and replace it with mode_controller_eyegaze_(rename).json, renamed to mode_controller.json.
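The file swap described above can also be scripted. This is a minimal sketch under stated assumptions: it assumes the Eye Gaze file sits in the same data folder as mode_controller.json (the page does not say where it lives), and the backup file name and the `use_eyegaze_default` helper are hypothetical.

```python
import shutil
from pathlib import Path


def use_eyegaze_default(data_dir: Path) -> Path:
    """Back up mode_controller.json, then overwrite it with the Eye Gaze variant.

    Returns the path of the backup file.
    """
    current = data_dir / "mode_controller.json"
    # Assumption: the Eye Gaze variant is stored alongside the current file.
    eyegaze = data_dir / "mode_controller_eyegaze_(rename).json"
    backup = data_dir / "mode_controller.backup.json"  # hypothetical backup name

    if not eyegaze.exists():
        raise FileNotFoundError(eyegaze)
    shutil.copy2(current, backup)   # keep the Nose Navigation default
    shutil.copy2(eyegaze, current)  # Eye Gaze settings become the default
    return backup
```

Restart the program after the swap. Copying the backup over mode_controller.json restores the original behaviour.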

From v3.03+, a new In-Range model has been added that works from the maximum range over which you can move your neck.

Saying "left range" upon starting sets the leftmost position marker, and saying "right range" then sets the rightmost position marker. The size of the nose joystick will then shrink to match, and it should be more comfortable to move within those ranges. Say "reset ranges" to go back to the default.
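One way to picture the range markers is as the two ends of a rescaled joystick axis. The sketch below is illustrative only and is not the In-Range model itself: the `NoseRange` class, the use of a normalised horizontal position between 0 and 1, and the default limits are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class NoseRange:
    """Left and right markers as normalised horizontal positions (0..1)."""
    left: float = 0.0   # hypothetical default: full frame width
    right: float = 1.0

    def reset(self) -> None:
        """Counterpart of saying "reset ranges"."""
        self.left, self.right = 0.0, 1.0

    def to_joystick(self, nose_x: float) -> float:
        """Map a nose position to a joystick deflection in [-1, 1]."""
        centre = (self.left + self.right) / 2
        half_width = (self.right - self.left) / 2
        if half_width <= 0:
            return 0.0  # markers not set sensibly; treat as centred
        deflection = (nose_x - centre) / half_width
        # Positions beyond the markers are clamped to full deflection.
        return max(-1.0, min(1.0, deflection))
```

With a narrower range, the same small head movement produces a larger deflection, which is why the joystick appears to shrink.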

Head rotation has also been added: rotating your head left and right is mapped to the left and right keyboard keys.

Here are some of the speech commands that are available when in speech modes. A full list can be seen by pressing "?" when running the software. When not in a speech mode in this microbuild (app) you will be in facial navigation mode (table below). It is currently English only but future versions will be multilingual.

Saying the "Next Mode" command will rotate through these options (*v3.03+):

- Nose Navigation (NoseNav) with Speech Commands

- Nose Navigation (NoseNav) with Facial Gestures

- Multitouch with Speech

- Eye Tracking (EyeGaze) with Speech Commands

- Eye Tracking (EyeGaze) with Facial Gestures

- For comfort wherever you are sitting or standing: if you are in NoseNav mode, say "butterfly" when you move your head to reset the centre of the virtual nose joystick and move the area of interest box to where your head is at that point.

- Whenever you enter Eye Gaze you will run through calibration, which has on-screen instructions.

- In Eye Gaze modes, while looking in a direction, say "go" and the cursor will start moving in that direction. Say "stop" to stop.

- For all speech modes, say "click", "double click" and "right click" for those mouse buttons. You can say "hold left" to drag in a direction and hold the left mouse button. Say "release left" to let go.

- In any editable text field, including in Word, Outlook, Teams etc; say "transcribe" and "stop transcribe" to start and stop transcription. Speak with short English sentences and pause, and it should appear.

- In any browser or office app, say "page up" and "page down". "Cut", "copy", "paste", "left arrow" and "right arrow" work as well.

- In PowerPoint you can say "next", "previous", "show fullscreen" and "escape".

- "Windows run" will bring up the Windows key + R dialog box.

- The other new speech commands in v3.02+ include minimize, maximize, screenshot and others. See the FAQ for the full list.

- For v3.04+ Visual Studio commands, see this blog here: https://hrussellzfac023.github.io/VisualNav/
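The "Next Mode" rotation listed above behaves like a circular list: after the last mode, it wraps back to the first. A minimal sketch, assuming the order shown in the list; the `ModeRotator` class is hypothetical and does not reflect how mode_controller.json is actually structured.

```python
from typing import List, Sequence

MODES = [
    "NoseNav with Speech Commands",
    "NoseNav with Facial Gestures",
    "Multitouch with Speech",
    "EyeGaze with Speech Commands",
    "EyeGaze with Facial Gestures",
]


class ModeRotator:
    """Steps through a fixed list of modes, wrapping around at the end."""

    def __init__(self, modes: Sequence[str], start: int = 0) -> None:
        self._modes: List[str] = list(modes)
        self._index = start

    @property
    def current(self) -> str:
        return self._modes[self._index]

    def next_mode(self) -> str:
        """Advance one step, as if the user said "Next Mode"."""
        self._index = (self._index + 1) % len(self._modes)
        return self.current
```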

The facial gestures in non-speech modes are:

| Gesture | Nose | Eyes |
|---|---|---|
| Smile | Left click and drag (v3.01+) | On/Off navigation |
| Fish Face | Left click | Left click |
| Eyebrow Raised | Double click | Double click |
| Mouth Open | Right click | Right click |

--------------------------

Experimental Featured Builds

--------------------------

We are looking for testers, gamers and accessibility experts to help with these software scenarios below.

The subsequent microbuild applications are also in the experimental GUI Suite.

(LEGACY) Experimental MotionInput3 (MI3) GUI Suite

Without Joypad requirements (v3.001 - April 2022)

Note that the joypad features are not available in this build.

You will also be asked to install DotNet 3.1; please use the Desktop x64 version.

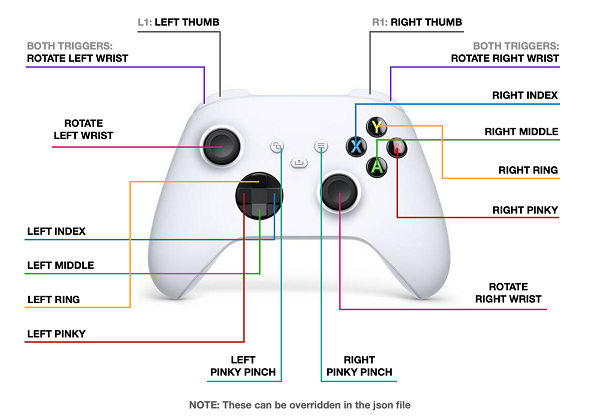

(LEGACY) MotionInput3 (MI3) Two handed In-Air joypad with Speech

April 2022

The in-air joypad microbuild is a technical Windows 10 demonstrator to show two-handed in-air joypad control of games compatible with Xbox controllers. It requires ViGEM (vigem.org) to be installed before running, as the software uses vgamepad. The current versions (at time of writing) are in the zip file.

Unzip the entire contents of the zip file, keeping the folder structure as it is, and run the program named MI3-In-Air-Joypad-with-Ask-KITA.exe

Gestures and their assignments:

- To press start - pinch together the right thumb and the right little pinky finger

- Rotating left wrist - analogue left d-circle

- Rotating right wrist - analogue right d-circle

- Right hand fingers down - ABXY buttons

- Left hand fingers down - Up-Down-Left-Right d-pad buttons

- Right handed to left handed switching - edit the data\events.json file where it has args for right hand

- Speech is enabled via Ask-KITA so if there is a chat window, enable with "transcribe" and then you can speak. "Stop transcribe" to stop.

The speech commands as above are in this build.
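To illustrate the wrist-rotation assignments above, the sketch below turns a rotation angle into analogue stick coordinates on a unit circle. It is not MotionInput's code: the `wrist_to_stick` function, the angle convention and the `strength` parameter are assumptions. A pair of values in the range -1 to 1 is the kind of input a virtual gamepad library such as vgamepad works with.

```python
import math
from typing import Tuple


def wrist_to_stick(angle_deg: float, strength: float = 1.0) -> Tuple[float, float]:
    """Convert a wrist rotation angle into analogue stick (x, y) values.

    Assumed convention: 0 degrees points the stick up and positive angles
    rotate clockwise. Both outputs lie in [-1, 1].
    """
    strength = max(0.0, min(1.0, strength))  # clamp how far the stick is pushed
    rad = math.radians(angle_deg)
    return (strength * math.sin(rad), strength * math.cos(rad))
```

For example, `wrist_to_stick(90)` gives a stick pushed fully to the right, up to floating-point rounding.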

(LEGACY) MotionInput3 (MI3) In-Air Inking with Speech

March 2022

The in-air inking microbuild (app) is a technical Windows 10/11 demonstrator to show native windows inking in the air, with depth calculations for ink thickness. It works with apps like Krita, OneNote, Fresh Paint, Photoshop and others. Speech is now available as commands and transcribe. You can hold a pen in your fingertips, or you can draw without any pen. The left hand will be used for erasing.

Unzip the entire contents of the zip file, keeping the folder structure as it is, and run the program named MI3-In-Air-Inking-with-Ask-KITA.exe. A calibration window will open. Follow the on-screen instructions to calibrate depth values. Once calibration is done, the main program will start automatically.

Open any Windows Inking application such as Paint 3D, OneNote or FreshPaint. Move your hand forwards or back to control depth (ink thickness). Activate the eraser by doing an index-thumb pinch with your non-dominant hand.

Calibration runs by default but can be turned off by editing data/config.json. Open the config.json file, find the run_calibration variable, and set it to false. Save the file and restart the program.
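The calibration setting can also be changed with a short script instead of a text editor. This is a sketch under one assumption: that run_calibration is a top-level key in config.json. The layout of the file is not documented here, so check it before relying on this; the `disable_calibration` helper is hypothetical.

```python
import json
from pathlib import Path


def disable_calibration(config_path: Path) -> dict:
    """Set run_calibration to false and write the file back."""
    config = json.loads(config_path.read_text(encoding="utf-8"))
    # Assumption: run_calibration lives at the top level of the JSON object.
    config["run_calibration"] = False
    config_path.write_text(json.dumps(config, indent=4), encoding="utf-8")
    return config
```

It could be called as `disable_calibration(Path("data/config.json"))` from the unzipped application folder, followed by a restart of the program.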

Digital Inking in-air with Depth

(LEGACY) MotionInput3 (MI3) In-Air Keyboard

April 2022

The in-air keyboard microbuild (app) is a technical Windows 10/11 demonstrator to show a two-handed keyboard in the air. It works with up to 6 fingers, but 2 is optimal. Keyboard shortcut keys are also assignable.

Unzip the entire contents of the zip file, keeping the folder structure as it is, and run the program named MI3-In-Air-Keyboard-with-Ask-KITA.exe, or if you are using the non-speech version, MI3-In-Air-Keyboard.exe

In-Air Keyboard

(LEGACY) MotionInput 3 (MI3) Retro Gamepad (hit triggers in air) with Walking on the Spot and Speech

April 2022

This microbuild (app) is a technical Windows 10/11 demonstrator that enables you to play retro games with usual ABXY gamepad buttons, by hitting in the air. It has 3 modes to play; mode 1 - tap d-pad to set walking direction, mode 2 - hold d-pad to walk, and mode 3 - walking is always forward (W key). In order to walk in the game, you have to walk on the spot to enable the directional D-pad buttons.
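The three play modes can be read as a small piece of gating logic: walking on the spot enables movement, and the mode decides which direction is pressed. The sketch below is one interpretation of that description, not the app's actual code; the `WalkingController` class and the direction names are hypothetical.

```python
from typing import Optional


class WalkingController:
    """Decides which direction to press, given walking state and d-pad hits."""

    def __init__(self, mode: int) -> None:
        if mode not in (1, 2, 3):
            raise ValueError("mode must be 1, 2 or 3")
        self.mode = mode
        self._latched: Optional[str] = None  # last tapped direction (mode 1)

    def update(self, is_walking: bool, dpad: Optional[str]) -> Optional[str]:
        """Return the direction to press for this frame, or None.

        is_walking: whether walking on the spot is currently detected.
        dpad: the d-pad direction being hit in the air right now, if any.
        """
        if self.mode == 1 and dpad is not None:
            self._latched = dpad  # a tap sets the walking direction
        if not is_walking:
            return None  # movement is only enabled while walking on the spot
        if self.mode == 1:
            return self._latched
        if self.mode == 2:
            return dpad  # only while the d-pad is being held
        return "forward"  # mode 3: always forward (W key)
```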

Speech with Ask-KITA is included. Say "Start Transcribe" and "Stop Transcribe" to start and stop general text entry; otherwise it is in commands mode. Keyboard shortcuts can be added to events.json in the Data directory. Unzip the entire contents of the zip file, keeping the folder structure as it is, and run the program named MI3-RetroGamepad-withWalking.exe

Retro Gamepad with walking on the spot

(LEGACY) MotionInput 3 (MI3) D-Pad (hit triggers in air) with Walking on the Spot and Mouse for FPS games

April 2022

This microbuild (app) is a technical Windows 10/11 demonstrator that enables you to play First Person and 3rd Person 3D Games while walking. In order to walk in the game, you have to walk on the spot to enable the directional D-pad buttons. The dominant hand controls the mouse cursor (normally the viewpoint in those games).

Unzip the entire contents of the zip file, keeping the folder structure as it is, and run the program named MI3-Walking-FPS-with-Ask-KITA.exe, or MI3-Walking-FPS.exe for the version without Ask-KITA speech.

Walking on the spot for FPS games

(LEGACY) Download UCL Ask-KITA v1.0 (own application)

March 2022

This is the package for Ask-KITA on its own, as a federated speech application on Windows 10/11. It is powered by VOSK. It should not need installation or admin rights and has both transcribe and command modes available.

Unzip the entire contents of the zip file, keeping the folder structure as it is, and run the program named Ask-Kita-v1.0.exe
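The split between transcribe and command modes can be sketched as a two-state router: recognised phrases are either treated as commands or passed through as text. This is an illustration only and does not use or describe the Ask-KITA or VOSK APIs; the `SpeechRouter` class and its return values are hypothetical.

```python
from typing import Tuple


class SpeechRouter:
    """Routes recognised phrases to command handling or to text entry."""

    def __init__(self) -> None:
        self.transcribing = False

    def handle(self, phrase: str) -> Tuple[str, str]:
        """Return ("mode", name), ("text", phrase) or ("command", phrase)."""
        spoken = " ".join(phrase.lower().split())
        if spoken == "stop transcribe":
            self.transcribing = False
            return ("mode", "commands")
        if spoken in ("transcribe", "start transcribe") and not self.transcribing:
            self.transcribing = True
            return ("mode", "transcribe")
        if self.transcribing:
            return ("text", phrase)  # pass the words through unchanged
        return ("command", spoken)   # look up as a command elsewhere
```

Checking for "stop transcribe" first means that phrase always ends transcription rather than being typed as text.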

UCL MotionInput 3.0 for Mac

This early preview has mouse navigation and some speech commands.

The Mac version requires the following permissions. The app will prompt the user for microphone and camera permission. For accessibility permission, however, the user needs to go to System Preferences -> Security & Privacy -> Accessibility and grant permission to the MotionInput application.

(LEGACY) MotionInput v2 - Sept 2021

If you wish to explore where we started from, download version 2 here.

OS, History and Demonstrations

- Windows 10: All preview features are in this version. New speech commands have been added to Ask-KITA.

- Windows 11: Available but with limited testing, may have more errors.

For the following, please Contact Us for access.

- Linux: Hands, Face, Eyes (OpenVino) and Speech work. Exercise recognition has not yet been ported.

- Android: Hands only for now; summer teams are working on it.

- Raspberry Pi: Hands, Face and Speech work. Eyes is being ported.

- Apple Mac: Hands and some limited speech commands work, an initial technical preview is available.

Libraries used in UCL MotionInput v3 and their respective licenses:

The UCL MotionInput Team - University College London, Department of Computer Science © 2022

FAQ

- What hardware do I need to run this?

  A Windows 10 based PC or laptop with a webcam! Ideally an Intel-based PC, 7th Gen and above, with 4GB RAM. An SSD is highly recommended. The more CPU cores the merrier! Running parallel ML and CV is highly compute intensive, even with our extensive optimisations. For example, in Pseudo-VR mode for an FPS game, you may be running hand recognition for the mouse, walking-on-the-spot recognition, hit targets with body landmarks, and speech, all simultaneously while rendering advanced game visuals. At the simplest end, doing mouse clicks in a web browser should be much less intensive.

- What platforms does this run on?

  Windows 10 for the full software, and Windows 10 and 11 for the microbuilds of the stand-alone features. We are also developing the software for Linux, Raspberry Pi, Android and Mac. If you would like to help to test this with us, please email us.

- How do I run the software?

  If you are running Windows 10 or 11, you can run the microbuilds of the features without any installation process. Download the zip file, unzip it to your PC, and run the MotionInput executable file. If you want to run the full software with the GUI front-end for settings, you will need Windows 10, and both ViGEMBus (for In-Air Joypad) and DotNet 3.1 for Desktops (x64) installed. Follow the instructions on the download link.

- This is very much in the realm of sci-fi! Can I do gestures like in <insert Hollywood film here>?

  Reach out to us with your suggestions, and let's see what we can do!

- What motivated you to build this?

  Covid-19 affected the world. For a while before the vaccines, as well as the public getting sick, hospital staff were getting severely ill. Keeping shared computers clean and germ-free comes at a cost to economies around the world. We saw a critical need to develop cheap or free software to help in healthcare and improve the way in which we work, so we examined many different methods for touchless computing. Along the journey, several major tech firms had made significant jumps in Machine Learning (ML) and Computer Vision, and our UCL IXN programme was well suited to getting them working together with students and academics. Some of the tech firms had also let go of past products which would have been useful if they were still in production, but the learning from them was still there. At the same time, we also realised that childhood obesity and general population health were deteriorating during lockdowns. So we developed several project packages to look specifically at how to get people moving more, with tuning of accuracy for various needs. Especially in the healthcare, education and industrial sectors, we looked at specific forms of system inputs and patterns of human movement, to develop a robust engine that could scale with future applications. The Touchless Computing team at UCL CS has a key aim of equitable computing for all, without requiring further redevelopment of existing and established software products in use today.

- What is Ask-KITA?

  Ask-KITA is our speech engine, much like Alexa and Siri. It is intended for teaching, office work and clinical/industrial specific speech recognition. KITA stands for Know-IT-All. It is a combinatorial speech engine that will mix well with motion gesture technologies and gives three key levels of speech processing: commands (such as turning phrases into keyboard shortcuts and mouse events); localised and offline live caption dictation without user training, delivering recognised words into text-based programs; and gesture-combined exploration of spoken phrases.

- What's next?

  We have a lot of great plans, and the tech firms on board are excited to see what our academics and students will come up with next. Keep in touch with us and send us your touchless computing requests. If it can help people especially, we want to know and to open collaborations.

- Where can I find more FAQ answers?

Team

Our UCL CS Touchless Computing Team (March 2022), with thanks to Intel UK.

Contact us to give us feedback, to reach out to us, for software requests and for commercial licensing terms.

Contact Us

Our Address

Department of Computer Science, UCL

Gower Street, London WC1E 6BT

Email Us

ucl.ixn@ucl.ac.uk

UCL CS Homepage

https://www.ucl.ac.uk/computer-science/